A new frontier for virtual edge-case testing to ensure VRU safety in the era of autonomous driving

By Ekim Yurtsever, Ph.D.

Research Associate 2-Engineer

The Ohio State University, Center for Automotive Research

Our mission at The Ohio State University Center for Automotive Research (CAR) is to become a catalyst for intelligent transportation systems research through interdisciplinary collaboration, both regionally and nationally. Vulnerable road user (VRU) safety is an area that especially needs strong interdisciplinary collaboration across computer vision, machine learning, and intelligent transportation systems to improve safety at all levels.

Ensuring VRU safety is critical for deploying autonomous vehicles on public roads, and rigorous testing and safety analysis are essential to this end. However, testing self-driving cars in edge-case scenarios is a complex task: collisions or near-collision events involving cyclists, pedestrians, and wheelchair users cannot be staged with human subjects without putting them at risk.

One alternative to real-world tests is to create edge-case scenarios in virtual environments, where dangerous collision events can be generated safely to test the robustness of autonomous driving algorithms. However, most driving simulators rely on conventional computer graphics pipelines that produce non-photorealistic imagery with limited visual fidelity. Visual fidelity is vital for evaluating the deep learning-based perception systems of autonomous vehicles, because a perception model trained on real camera images may behave differently on synthetic ones. Without photorealistic simulators, virtual testing cannot ensure VRU safety.

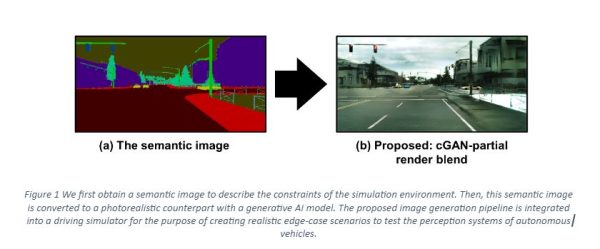

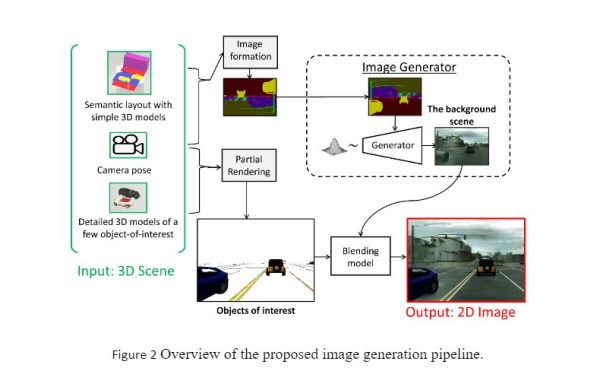

We aim to alleviate this issue by using AI to increase the visual fidelity of conventional driving simulators. Our main idea is to combine two image sources. First, we obtain a textureless semantic image through a conventional graphics pipeline. Then, we convert this semantic image into a photorealistic background scene with a generative adversarial network (GAN) trained on real-world driving data, as shown in Figure 1. In parallel, we render selected objects of interest conventionally; this step is necessary to retain full control over the scene’s composition. Finally, a blending model combines both images into the output image. Figure 2 shows the overall architecture of our method.
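The final compositing step of this pipeline can be illustrated with a minimal sketch. It is not the blending model used in the study: it swaps in plain per-pixel alpha compositing with an optional box-blur "feather" to soften seams, and it assumes the GAN background, the conventionally rendered foreground, and a per-pixel class-ID map of the objects of interest are already available at the same resolution. The function names and the class ID 7 are hypothetical, chosen only for illustration.

```python
from typing import List, Set, Tuple

# Toy image types as nested lists, so the sketch needs no third-party libraries.
Pixel = Tuple[float, float, float]   # RGB values in [0, 1]
Image = List[List[Pixel]]
LabelMap = List[List[int]]           # per-pixel class IDs from the semantic render
Mask = List[List[float]]             # per-pixel foreground weight in [0, 1]


def object_mask(labels: LabelMap, object_classes: Set[int]) -> Mask:
    """Hard mask: 1.0 where a rendered object of interest is, else 0.0 (hypothetical helper)."""
    return [[1.0 if c in object_classes else 0.0 for c in row] for row in labels]


def feather(mask: Mask, radius: int = 1) -> Mask:
    """Soften mask edges with a box blur; the window is clipped at the image border."""
    h, w = len(mask), len(mask[0])
    out: Mask = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            window = [
                mask[yy][xx]
                for yy in range(max(0, y - radius), min(h, y + radius + 1))
                for xx in range(max(0, x - radius), min(w, x + radius + 1))
            ]
            out[y][x] = sum(window) / len(window)
    return out


def blend(background: Image, foreground: Image, mask: Mask) -> Image:
    """Per-pixel alpha compositing: out = m * foreground + (1 - m) * background."""
    return [
        [
            tuple(m * f + (1.0 - m) * b for f, b in zip(fg_px, bg_px))
            for bg_px, fg_px, m in zip(bg_row, fg_row, m_row)
        ]
        for bg_row, fg_row, m_row in zip(background, foreground, mask)
    ]


# Usage on a 1x3 toy frame; class 7 stands in for a hypothetical "pedestrian" ID.
labels = [[0, 7, 0]]
gan_background = [[(0.0, 0.0, 0.0)] * 3]    # stand-in for the GAN output
rendered_objects = [[(1.0, 1.0, 1.0)] * 3]  # stand-in for the rendered VRU layer
hard = blend(gan_background, rendered_objects, object_mask(labels, {7}))
soft = blend(gan_background, rendered_objects, feather(object_mask(labels, {7})))
```

A hard mask keeps the rendered object pixel-exact, which preserves control over scene composition, while feathering trades some of that exactness for less visible seams at object boundaries. A learned blending model can adapt this trade-off to image content, which a fixed blur cannot.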

Our experiments show that the proposed method can generate high-fidelity imagery while preserving the semantic layout of the input scene. Future work will use this virtual environment to generate edge-case scenarios involving VRUs.
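One common way to check whether generated imagery stays consistent with its semantic input is to segment the output image with a pretrained segmentation network and compare the result with the input label map using mean intersection-over-union (mIoU). The sketch below assumes that segmentation step has already produced a label map; this article does not state which metrics the study used, so treat it as an illustration rather than the paper's evaluation protocol.

```python
from typing import List

LabelMap = List[List[int]]  # per-pixel class IDs


def mean_iou(target: LabelMap, predicted: LabelMap, num_classes: int) -> float:
    """Mean intersection-over-union over the classes present in either map."""
    ious: List[float] = []
    for c in range(num_classes):
        inter = union = 0
        for t_row, p_row in zip(target, predicted):
            for t, p in zip(t_row, p_row):
                inter += int(t == c and p == c)
                union += int(t == c or p == c)
        if union > 0:  # skip classes absent from both maps
            ious.append(inter / union)
    return sum(ious) / len(ious) if ious else 0.0


# Usage: input semantic map vs. a (hypothetical) segmentation of the generated image.
semantic_input = [[0, 0, 1, 1]]
segmented_output = [[0, 1, 1, 1]]
score = mean_iou(semantic_input, segmented_output, num_classes=2)
```

Here class 0 scores 1/2 and class 1 scores 2/3, so the mean is 7/12 (about 0.58); a perfect match with the input layout would score 1.0.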

This study [1] was published in IEEE Transactions on Intelligent Transportation Systems.

[1] Yurtsever, E., Yang, D., Koc, I. M., & Redmill, K. A. (2022). Photorealism in Driving Simulations: Blending Generative Adversarial Image Synthesis With Rendering. IEEE Transactions on Intelligent Transportation Systems, 23(12), 23114-23123.
